Methods

A compact overview of the techniques I use in NLP/ML projects.

Classical ML baselines

TF-IDF / BoW → Logistic Regression, Linear SVM; fastText as a stronger baseline. Stratified k-fold, macro-F1 / PR-AUC, confusion matrices, slice-based error analysis.
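To illustrate why macro-F1 is reported next to accuracy on imbalanced data, here is a minimal standard-library sketch. The labels and the majority-class "model" are made up for the example; in practice a library implementation would normally be used.

```python
from collections import Counter

def per_class_f1(y_true, y_pred):
    """Return {label: F1} for every label seen in y_true or y_pred."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted label gets a false positive
            fn[t] += 1  # true label gets a false negative
    scores = {}
    for label in set(y_true) | set(y_pred):
        denom = 2 * tp[label] + fp[label] + fn[label]
        scores[label] = 2 * tp[label] / denom if denom else 0.0
    return scores

def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1, so rare classes count as much as common ones."""
    scores = per_class_f1(y_true, y_pred)
    return sum(scores.values()) / len(scores)

# Toy, imbalanced data (illustrative only): 8 negatives, 2 positives.
y_true = ["neg"] * 8 + ["pos"] * 2
y_pred = ["neg"] * 10  # a classifier that always predicts the majority class

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
score = macro_f1(y_true, y_pred)
print(f"accuracy={accuracy:.3f} macro_f1={score:.3f}")
```

The majority-class predictor looks acceptable on accuracy but is penalised by macro-F1, because the F1 of the ignored class is zero.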

Hyperparameter optimisation

Grid/Random vs. Optuna/Bayesian search; early stopping; LR schedules (OneCycle, cosine), warmup; fp16/bf16, grad accumulation & clipping.
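A rough sketch of linear warmup followed by cosine decay, written as a plain function of the step index. The function name, the step counts and the peak learning rate are illustrative assumptions, not settings from a specific project.

```python
import math

def lr_at_step(step, total_steps, peak_lr, warmup_steps, min_lr=0.0):
    """Linear warmup to peak_lr, then cosine decay towards min_lr."""
    if step < warmup_steps:
        # Ramp up linearly; the last warmup step reaches peak_lr.
        return peak_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    progress = min(progress, 1.0)
    cosine = 0.5 * (1.0 + math.cos(math.pi * progress))
    return min_lr + (peak_lr - min_lr) * cosine

# Hypothetical settings: 100 steps, 10 of them warmup, peak LR 3e-5.
schedule = [
    lr_at_step(s, total_steps=100, peak_lr=3e-5, warmup_steps=10)
    for s in range(100)
]
```

In a training loop the same function could be wrapped in the framework's own scheduler mechanism instead of being called by hand.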

Transformer fine-tuning

huBERT / HunEmBERT / XLM-R; classification & sequence tagging; class imbalance (focal loss, reweighting); layer-wise LR; freeze/unfreeze; efficient fine-tuning (LoRA/PEFT).
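As a small illustration of how focal loss handles class imbalance, the sketch below computes it for a single example from its definition, FL = -alpha_t (1 - p_t)^gamma log(p_t). The probabilities are invented; a real fine-tuning run would use a vectorised, framework-native version.

```python
import math

def focal_loss(probs, target, gamma=2.0, alpha=None):
    """Focal loss for one example.

    probs:  predicted class probabilities (assumed to sum to 1)
    target: index of the true class
    alpha:  optional per-class weights (reweighting rare classes)
    """
    p_t = max(probs[target], 1e-12)  # clamp to avoid log(0)
    weight = alpha[target] if alpha is not None else 1.0
    return -weight * (1.0 - p_t) ** gamma * math.log(p_t)

# Illustrative values: a confidently correct example and a misclassified one.
easy = focal_loss([0.05, 0.95], target=1)
hard = focal_loss([0.70, 0.30], target=1)
ce_hard = focal_loss([0.70, 0.30], target=1, gamma=0.0)  # gamma=0 is plain cross-entropy
```

With gamma above zero, well-classified examples contribute almost nothing, so the gradient signal concentrates on the hard (often minority-class) examples.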

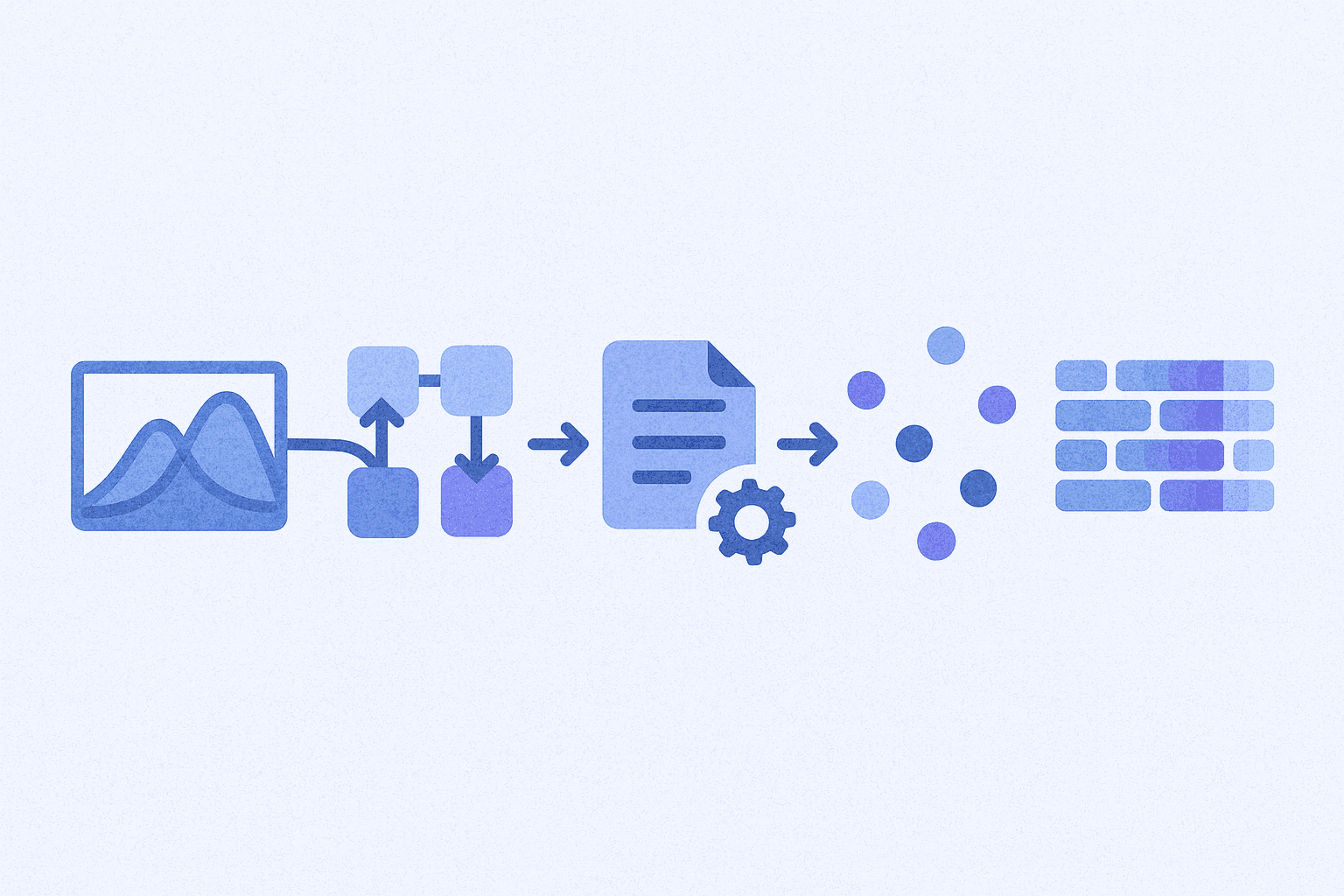

Data processing & augmentation

Language-specific normalisation; EDA (Easy Data Augmentation), back-translation, LLM-based generation with controlled prompts; dedup & leakage prevention; morphology/lemma sanity checks.
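One simple way to approach dedup and leakage prevention is exact matching on a normalised fingerprint, sketched below. The normalisation steps (NFKC, case folding, whitespace collapsing) and the helper names are assumptions for illustration; near-duplicate detection would need something stronger, such as MinHash.

```python
import hashlib
import re
import unicodedata

def fingerprint(text):
    """Hash a normalised form of the text so trivial variants collide."""
    norm = unicodedata.normalize("NFKC", text).casefold()
    norm = re.sub(r"\s+", " ", norm).strip()
    return hashlib.sha1(norm.encode("utf-8")).hexdigest()

def dedup(texts):
    """Keep the first occurrence of each fingerprint, preserving order."""
    seen, kept = set(), []
    for t in texts:
        fp = fingerprint(t)
        if fp not in seen:
            seen.add(fp)
            kept.append(t)
    return kept

def drop_leaked(train, test):
    """Remove test items whose fingerprint also occurs in the training set."""
    train_fps = {fingerprint(t) for t in train}
    return [t for t in test if fingerprint(t) not in train_fps]

# Toy Hungarian examples (illustrative only).
train = ["Jó reggelt!", "jó  reggelt!", "Köszönöm szépen."]
test = ["JÓ REGGELT!", "Viszontlátásra."]

train_clean = dedup(train)
test_clean = drop_leaked(train_clean, test)
```

Running the leakage filter before any augmentation step matters, since augmented copies of a test sentence would otherwise end up in training.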

Unsupervised learning

UMAP/t-SNE/PCA visualisation; HDBSCAN/DBSCAN clustering; BERTopic / c-TF-IDF; K-means; elbow method; outlier detection & data quality audit.
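The elbow method can be sketched with a tiny pure-Python K-means: compute the inertia (within-cluster sum of squared distances) for several values of k and look for the point where the improvement flattens. The deterministic farthest-point initialisation and the toy 2-D points are assumptions chosen to keep the example reproducible; real embeddings would go through a library implementation.

```python
import math

def kmeans_inertia(points, k, iters=20):
    """Run Lloyd's algorithm and return the final inertia."""
    # Deterministic farthest-point initialisation (an illustrative choice).
    centers = [points[0]]
    while len(centers) < k:
        centers.append(
            max(points, key=lambda p: min(math.dist(p, c) for c in centers))
        )
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in points:
            idx = min(range(k), key=lambda i: math.dist(p, centers[i]))
            clusters[idx].append(p)
        # Move each center to the mean of its members; keep it if the cluster is empty.
        centers = [
            tuple(sum(col) / len(members) for col in zip(*members))
            if members else centers[i]
            for i, members in enumerate(clusters)
        ]
    return sum(min(math.dist(p, c) for c in centers) ** 2 for p in points)

# Three well-separated toy blobs (made-up data).
points = [
    (0, 0), (0, 1), (1, 0),
    (10, 10), (10, 11), (11, 10),
    (20, 0), (20, 1), (21, 0),
]
inertias = {k: kmeans_inertia(points, k) for k in range(1, 6)}
for k, value in inertias.items():
    print(k, round(value, 2))
```

On this data the inertia drops sharply up to k=3 and only marginally afterwards, which is the "elbow" the heuristic looks for.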

Explainability & analysis

SHAP (token/phrase), attention viz; feature ablation; counterfactuals; calibration (ECE) & threshold tuning.
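For the calibration part, a minimal sketch of Expected Calibration Error with equal-width confidence bins. The bin count and the toy predictions are assumptions; the bins here are half-open on the right, which can differ slightly from other implementations at bin edges.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """ECE: weighted average gap between confidence and accuracy per bin."""
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)  # conf == 1.0 goes to the last bin
        bins[idx].append((conf, ok))
    n = len(confidences)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(ok for _, ok in members) / len(members)
        ece += len(members) / n * abs(avg_conf - accuracy)
    return ece

# Toy predictions (illustrative): an over-confident model.
confidences = [0.95, 0.95, 0.95, 0.95, 0.55, 0.55]
correct = [1, 1, 0, 0, 1, 0]
ece = expected_calibration_error(confidences, correct)
print(round(ece, 4))
```

A high ECE like this one suggests that raw scores should not be thresholded directly without recalibration.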

Robustness & fairness

Noisy-input tests (typos/diacritics), adversarial perturbations; cross-domain generalisation; bias/fairness slice analysis; uncertainty-aware decisions.
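A diacritics test of the kind listed above could look like the following sketch: strip accents from each input and measure how often the prediction flips. `toy_predict` is a hypothetical stand-in for a real model, and the sentences are invented.

```python
import unicodedata

def strip_diacritics(text):
    """Remove combining marks, e.g. 'ő' -> 'o', as users often type without accents."""
    decomposed = unicodedata.normalize("NFD", text)
    return "".join(ch for ch in decomposed if unicodedata.category(ch) != "Mn")

def flip_rate(predict, texts, perturb):
    """Share of inputs whose predicted label changes under the perturbation."""
    changed = sum(predict(t) != predict(perturb(t)) for t in texts)
    return changed / len(texts)

def toy_predict(text):
    """Hypothetical classifier that (brittly) keys on one accented word."""
    return "positive" if "jó" in text.lower() else "other"

texts = ["Ez nagyon jó volt", "Árvíztűrő tükörfúrógép"]
rate = flip_rate(toy_predict, texts, strip_diacritics)
print(strip_diacritics(texts[1]), rate)
```

The same `flip_rate` helper works for other perturbations (typos, casing) by swapping the `perturb` function.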

LLM adaptation & RAG

Instruction/preference tuning (LoRA); RAG with BM25 / dense / hybrid search, chunking/windowing, cross-encoder reranking; FAISS/pgvector; guardrails and citation-style outputs; context-length vs. latency trade-offs.
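Hybrid search needs some way to merge the BM25 and dense result lists. One common option, used here purely as an illustrative assumption, is reciprocal rank fusion (RRF); the document IDs and the constant k=60 are placeholders.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of doc IDs; higher fused score ranks first."""
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Sort by descending score, breaking ties by doc ID for determinism.
    return sorted(scores, key=lambda d: (-scores[d], d))

# Hypothetical top-3 results from each retriever.
bm25_ranking = ["d3", "d1", "d7"]
dense_ranking = ["d1", "d5", "d3"]

fused = reciprocal_rank_fusion([bm25_ranking, dense_ranking])
print(fused)
```

RRF only uses ranks, so it avoids having to normalise BM25 scores against cosine similarities; the fused list would then typically be passed to the cross-encoder reranker.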

Evaluation methodology

Stratified k-fold & temporal splits; macro-/weighted-F1, AUROC/PR-AUC; cost-sensitive metrics; production-like eval (latency, throughput, memory); model cards & decision logs.
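A temporal split can be sketched in a few lines: everything before a cutoff date is used for training, everything from the cutoff onward for evaluation. The record layout with a `date` field is an assumption made for the example.

```python
from datetime import date

def temporal_split(records, cutoff):
    """Train on records strictly before `cutoff`, evaluate on the rest."""
    train = [r for r in records if r["date"] < cutoff]
    test = [r for r in records if r["date"] >= cutoff]
    return train, test

# Toy records (illustrative only).
records = [
    {"text": "a", "label": 0, "date": date(2023, 1, 5)},
    {"text": "b", "label": 1, "date": date(2023, 3, 9)},
    {"text": "c", "label": 0, "date": date(2023, 6, 1)},
    {"text": "d", "label": 1, "date": date(2023, 8, 20)},
]
train, test = temporal_split(records, cutoff=date(2023, 6, 1))

# Sanity check: no training example is newer than any test example.
assert max(r["date"] for r in train) < min(r["date"] for r in test)
```

Unlike shuffled k-fold, this mimics deployment, where the model only ever sees data older than what it is asked to predict.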

MLOps & deployment

FastAPI inference, batching/streaming; Docker with env pinning; monitoring (latency, errors, drift); experiment tracking (MLflow/W&B); reproducibility (seeds, dataset versioning); lightweight demo UIs.
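For the seeding part of reproducibility, a minimal helper might look like this. It is a sketch: NumPy and PyTorch are seeded only if they happen to be installed, and full determinism on GPU usually needs further framework-specific settings that are not shown here.

```python
import os
import random

def set_seed(seed):
    """Seed the random number generators that are available in this environment."""
    random.seed(seed)
    # Only affects Python processes started after this point (e.g. workers).
    os.environ["PYTHONHASHSEED"] = str(seed)
    try:
        import numpy as np
        np.random.seed(seed)
    except ImportError:
        pass  # NumPy not installed; nothing to seed
    try:
        import torch
        torch.manual_seed(seed)
        torch.cuda.manual_seed_all(seed)  # silently ignored without CUDA
    except ImportError:
        pass  # PyTorch not installed; nothing to seed

set_seed(13)
first = [random.random() for _ in range(3)]
set_seed(13)
second = [random.random() for _ in range(3)]
```

Logging the seed alongside the dataset version in the experiment tracker makes a run traceable later.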